What is robots.txt and Why Do You Need This File at All

Which pages of a site appear in search results depends in part on robots.txt. That makes the file important for SEO and for keeping non-public sections out of search. Read on to learn what this file is and how to use it.

Contents:

1. What is a robots.txt file

2. Why do I need this file

3. robots.txt rules

4. robots.txt content examples

5. How to create a robots.txt file

6. Conclusion

What is a robots.txt file

Robots.txt is a text document that contains rules telling search engines how to crawl a site. These instructions help Google and similar services understand which content on the site may be crawled and added to the index and which may not. The site owner can create such a file at any time and specify whatever rules they need. Reputable search engines follow these rules, although compliance is voluntary: the file is a set of instructions, not an access control mechanism.

Why do I need this file

Using robots.txt, you can block search bots from accessing any part of the site, web pages, and even individual files, be it images, audio, or videos.

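This keeps the materials from being crawled and, in most cases, out of search results, so people will not come across them through search. There is a nuance, however. If a page you have blocked from crawling is linked to from other sites, its address may still appear in search results. To reliably keep a page out of the index, add the “noindex” rule to the code of that page, for example with a robots meta tag, and do not block the page in robots.txt, because a crawler has to read the page to see the rule.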

If, on the contrary, you are promoting the site in search engines and notice that the entire resource or some of its pages are missing from search results, the first thing to check is robots.txt. It may contain a rule that bans crawling.

robots.txt rules

The crawling rules, or instructions, that can be added to robots.txt are called directives. Let's look at the main ones.

User-agent directive (mandatory)

This directive opens a group of rules and determines which crawlers must follow all the rules of the current group. The syntax would be “User-agent: robot name”.

For example, if you want to create a group of rules for the Google search engine, you should write the directive as follows:
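A minimal sketch of that line, assuming Google's main crawler, which identifies itself as Googlebot:

```text
User-agent: Googlebot
```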

For the Bing robot, write it like this:
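A sketch for Bing, assuming its main crawler token, Bingbot:

```text
User-agent: Bingbot
```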

If you want to create a universal group of rules for all search engines at once, use an asterisk instead of the robot name:
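A sketch of the universal form. The asterisk matches any crawler that does not have a more specific group of its own:

```text
User-agent: *
```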

Disallow and Allow directives (mandatory)

Each rule group must contain at least one of two directives: Disallow or Allow.

The Disallow directive points to a section of the site, a page or a file that the current crawler is not allowed to crawl. The syntax is “Disallow: path to the section/page/file relative to the root folder.”

For example, to prevent the robot from crawling the hidden-page.html page from the root folder, add the following text to robots.txt:
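A sketch of this rule, shown on its own; in a real file it would sit inside a group opened by a User-agent line:

```text
Disallow: /hidden-page.html
```

The leading slash marks the path as relative to the site root.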

And if you want to completely prohibit crawling of the site, you will need to write the directive as follows:
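A sketch of the site-wide ban. A single slash stands for the root folder, so the rule matches every path on the site:

```text
Disallow: /
```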

The Allow directive points to a section of the site, a page or a file that, on the contrary, the current robot is allowed to crawl. The syntax is “Allow: path to the section/page/file relative to the root folder.”
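A sketch of how Allow is typically combined with Disallow, assuming a page named visible-page.html in the root folder. The Allow line is listed first so that the result is the same for parsers that apply the first matching rule and for those that apply the most specific one:

```text
Allow: /visible-page.html
Disallow: /
```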

This entry means that the current robot can only crawl the visible-page.html page from the root folder, and the rest of the site's content is not available to it.

Sitemap directive (optional)

The Sitemap directive points to the sitemap, a file that tells crawlers which pages the site contains and wants crawled. This helps search engines discover pages and makes indexing the site more efficient. The syntax is “Sitemap: full link to the sitemap file.” Example record:
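A sketch of such a record, assuming the sitemap is stored as sitemap.xml in the root of site.com (both the domain and the file name are placeholders):

```text
Sitemap: http://site.com/sitemap.xml
```

Unlike Allow and Disallow, this line is not tied to any User-agent group and can appear anywhere in the file.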

robots.txt content examples

For clarity, let's look at how different directives are written together. Below are examples of complete robots.txt files. Note: by default, crawlers are allowed to crawl any sections, pages, and files that are not blocked by a Disallow rule.

Example 1. We completely prohibit site scanning for the Google robot and add a sitemap:
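One way this file could look, assuming the Googlebot token and a placeholder sitemap address on site.com:

```text
User-agent: Googlebot
Disallow: /

Sitemap: http://site.com/sitemap.xml
```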

Example 2. We completely prohibit site crawling for Google and Bing robots:

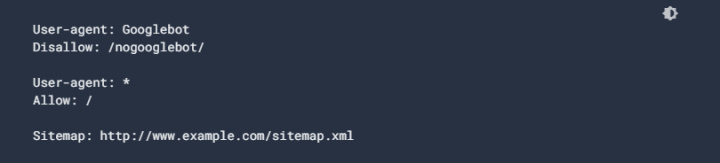
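A file with this effect could be written as follows, assuming the Googlebot and Bingbot tokens. Several User-agent lines may share one group of rules:

```text
User-agent: Googlebot
User-agent: Bingbot
Disallow: /
```

Writing two separate groups, each with its own Disallow line, would give the same result.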

Example 3. In the first group, we prohibit the Google robot from scanning all links starting with “http://site.com/nogooglebot/”; in the second group, we allow full scanning for the rest of the robots:
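A sketch of this file, assuming the Googlebot token for the first group:

```text
User-agent: Googlebot
Disallow: /nogooglebot/

User-agent: *
Allow: /
```

The paths are written relative to the root, so the domain itself does not appear in the rules.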

For more information on syntax and directives, see the crawler documentation that each search engine publishes on its own website.

How to create a robots.txt file

If you're using a website builder like Blogger or GoDaddy, you'll likely be able to write directives right in your platform settings.

However, in most cases, rules need to be added in a separate file. Here's how to do it:

- Create a plain text file. This can be done, for example, in standard Windows Notepad or in WordPad, as long as the file is saved as plain text.

- Add the necessary directives to the file and save it as “robots.txt”.

- Upload the finished file to the root directory of the site. How to do this depends on the hosting you use. If you are not sure, read your platform's instructions for uploading files.

- Check that robots.txt is available on the site. To do this, open site.com/robots.txt in a browser, where site.com is your site's domain.

Conclusion

- Robots.txt is a text document that contains rules for crawling a site by search engines.

- With this file, you can control how search bots access any part of the site, web pages, and even individual files, be it images, audio, or videos. This way, you can prevent or allow this content to be crawled and to appear in search results. Therefore, robots.txt is very important for SEO.

- The site owner can create robots.txt at any time, specify the necessary rules and add the file to the site. Reputable search engines will then follow these instructions.
