Data Integration Reference Architecture

A data integration reference architecture serves as a foundational blueprint for organizations seeking to streamline their data management processes. By providing a structured approach to integrating diverse data sources, it improves data accessibility, consistency, and quality, and helps businesses turn data into insights that support informed decision-making and innovation. This article explores the key components and benefits of implementing a robust data integration framework.

Introduction to Data Integration and its Challenges

Data integration is a crucial process in modern enterprises, enabling the consolidation of data from disparate sources into a unified view. This process helps organizations enhance decision-making, improve data quality, and streamline operations. However, integrating data is not without its challenges. The increasing volume and variety of data, coupled with the need for real-time processing, make data integration a complex task.

- Data Silos: Isolated data systems hinder seamless integration and access.

- Data Quality: Inconsistent data formats and errors can compromise integration efforts.

- Scalability: As data grows, systems must adapt to handle increased loads efficiently.

- Security: Ensuring data privacy and compliance during integration is paramount.

Addressing these challenges requires a robust data integration strategy that incorporates advanced technologies and best practices. Organizations must invest in scalable infrastructure and adopt tools that support data cleansing and transformation. By overcoming these obstacles, businesses can unlock the full potential of their data, driving innovation and maintaining a competitive edge in the market.

Key Components of a Data Integration Reference Architecture

A Data Integration Reference Architecture is composed of several key components that keep data flowing across diverse systems. The first is the set of data sources, which can include databases, applications, and external data feeds. These sources provide the raw data that needs to be integrated. Next comes the data transformation layer, which standardizes and processes data into a unified format suitable for analysis and storage. This layer often employs ETL (Extract, Transform, Load) tools to automate the transformation process.
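The flow from data sources through the transformation layer can be sketched in a few lines. This is a minimal illustration, not a production pipeline: the two sources (`CRM_CSV`, `SHOP_JSON`), their field names, the `DD/MM/YYYY` date format, and the `customers` target table are all assumptions made up for the example, and SQLite stands in for a real warehouse.

```python
import csv
import io
import json
import sqlite3

# Hypothetical raw inputs standing in for two differently shaped sources.
CRM_CSV = "id,full_name,signup\n1,Ada Lovelace,2024-01-05\n2,Alan Turing,2024-02-10\n"
SHOP_JSON = '[{"customer_id": 3, "name": "grace hopper", "created": "05/03/2024"}]'


def extract():
    """Read raw rows from each source in its native format."""
    crm_rows = list(csv.DictReader(io.StringIO(CRM_CSV)))
    shop_rows = json.loads(SHOP_JSON)
    return crm_rows, shop_rows


def transform(crm_rows, shop_rows):
    """Map both sources onto one schema: (id, name, ISO 8601 signup date)."""
    unified = []
    for row in crm_rows:
        unified.append((int(row["id"]), row["full_name"].title(), row["signup"]))
    for row in shop_rows:
        # Assumption: the shop source writes dates as DD/MM/YYYY.
        day, month, year = row["created"].split("/")
        unified.append((row["customer_id"], row["name"].title(), f"{year}-{month}-{day}"))
    return unified


def load(rows, conn):
    """Write the unified rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, name TEXT, signup_date TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()


def run_etl(conn):
    """Run extract, transform, and load, then return the stored rows."""
    load(transform(*extract()), conn)
    return conn.execute(
        "SELECT id, name, signup_date FROM customers ORDER BY id"
    ).fetchall()
```

Calling `run_etl(sqlite3.connect(":memory:"))` returns the three customers in one consistent shape, regardless of which source they came from. Keeping extract, transform, and load as separate functions makes it easier to swap a source or a target without touching the standardization rules.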

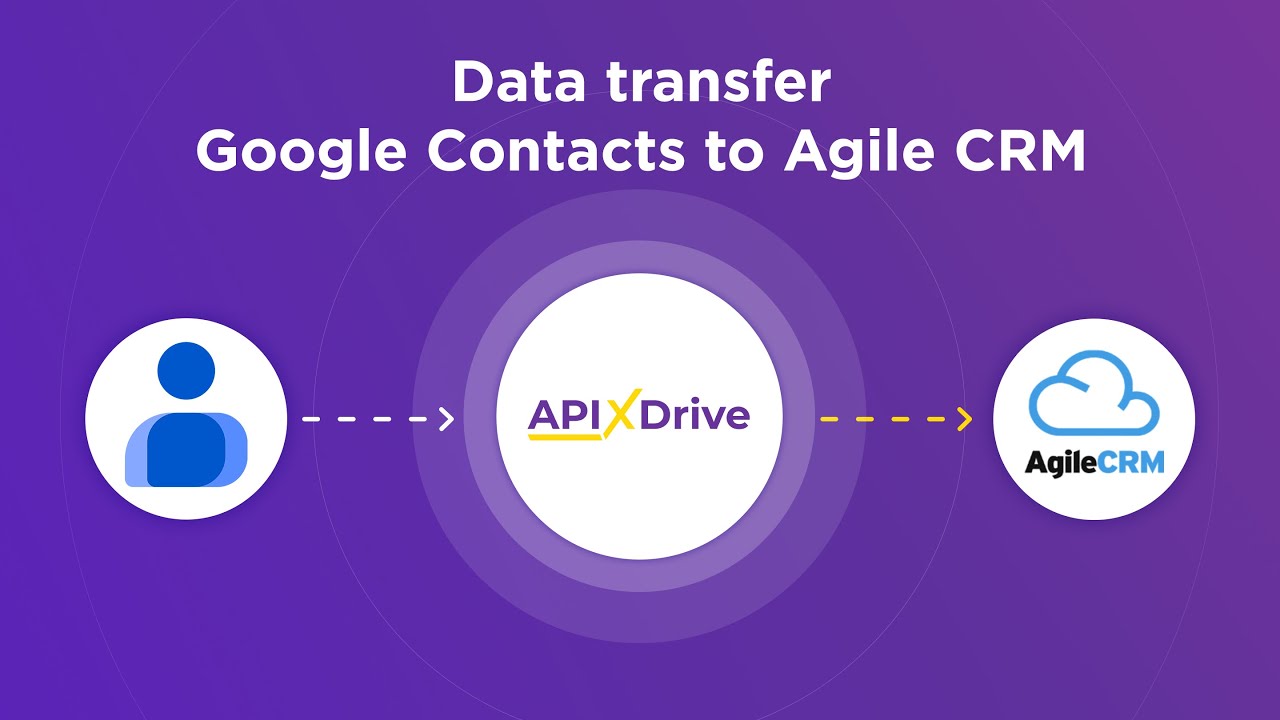

Another vital component is the integration platform, which acts as the backbone of the architecture and handles communication between disparate systems. Services like ApiX-Drive can be used to configure and automate integrations without requiring extensive coding expertise. Data storage solutions, such as data warehouses or data lakes, are needed to store and retrieve integrated data efficiently. Finally, governance and security frameworks ensure that data is managed responsibly, in compliance with regulations and protected against unauthorized access.

Data Integration Patterns and Best Practices

Data integration patterns describe proven ways to move and expose data between diverse systems. Choosing the right pattern helps ensure data quality, consistency, and accessibility, which in turn supports informed decision-making and innovation.

- ETL (Extract, Transform, Load): This pattern involves extracting data from various sources, transforming it to meet business requirements, and loading it into a target system.

- Data Virtualization: Provides a unified view of data from multiple sources without physical data movement, enhancing real-time access and analysis.

- API-Led Connectivity: Utilizes APIs to connect and integrate disparate systems, promoting agility and scalability.

- Event-Driven Architecture: Enables real-time data integration through event streaming, ensuring timely data updates across systems.

- Data Federation: Exposes data from different sources as a single virtual database, so queries run from one access point while the data stays in the source systems.

Adopting these patterns helps keep data management robust, scalable, and efficient. Organizations should prioritize security, scalability, and flexibility in their integration strategies so they can adapt to evolving business needs and technological advancements. Applied well, these patterns improve data accessibility and decision-making.
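To make the data federation pattern more concrete, the sketch below queries two separate databases through one connection without copying rows between them. It is only an analogy under stated assumptions: it uses SQLite's `ATTACH DATABASE` with two in-memory databases, and the `crm` and `billing` names, tables, and sample rows are hypothetical. A real federation layer would typically span heterogeneous systems through a dedicated query engine.

```python
import sqlite3


def build_federated_connection():
    """Attach two independent in-memory databases to one connection."""
    conn = sqlite3.connect(":memory:")
    # Each ATTACH creates a separate database reachable under its own name.
    conn.execute("ATTACH DATABASE ':memory:' AS crm")
    conn.execute("ATTACH DATABASE ':memory:' AS billing")

    # Hypothetical sample data; each table lives in its own database.
    conn.execute("CREATE TABLE crm.customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("CREATE TABLE billing.invoices (customer_id INTEGER, amount REAL)")
    conn.executemany(
        "INSERT INTO crm.customers VALUES (?, ?)",
        [(1, "Acme"), (2, "Globex")],
    )
    conn.executemany(
        "INSERT INTO billing.invoices VALUES (?, ?)",
        [(1, 120.0), (1, 80.0), (2, 50.0)],
    )
    conn.commit()
    return conn


def revenue_by_customer(conn):
    """Join across both databases from a single access point."""
    query = """
        SELECT c.name, SUM(i.amount)
        FROM crm.customers AS c
        JOIN billing.invoices AS i ON i.customer_id = c.id
        GROUP BY c.id, c.name
        ORDER BY c.name
    """
    return conn.execute(query).fetchall()
```

The caller writes one query against one connection, while the customer and invoice rows remain in their own databases. That is the main trade-off of federation compared with ETL: no data movement, at the cost of depending on every source at query time.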

Deployment and Operational Considerations

When deploying a Data Integration Reference Architecture, it is crucial to consider the scalability and flexibility of the chosen solutions. The architecture should support seamless integration of various data sources and accommodate the growing volume of data. Additionally, ensuring data quality and consistency across systems is paramount to the success of data integration efforts.

Operational considerations involve the monitoring and management of data flows to ensure optimal performance. Implementing robust error-handling mechanisms and real-time monitoring tools can help identify and resolve issues quickly. Security measures must also be in place to protect sensitive data and comply with regulatory requirements.
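One way to combine error handling with basic monitoring is to retry transient failures, set aside records that keep failing, and count outcomes along the way. The sketch below assumes this approach; `FlowMetrics`, `process_with_retry`, and the parameter defaults are hypothetical names chosen for illustration, and in practice the counters would be exported to whatever monitoring tool is in use.

```python
import logging
import time
from dataclasses import dataclass, field

logger = logging.getLogger("integration")


@dataclass
class FlowMetrics:
    """Counters a monitoring tool could scrape or alert on."""
    succeeded: int = 0
    failed: int = 0
    retries: int = 0
    dead_letter: list = field(default_factory=list)


def process_with_retry(records, handler, metrics, max_attempts=3, base_delay=0.0):
    """Run handler on each record, retrying failures with exponential backoff."""
    for record in records:
        for attempt in range(1, max_attempts + 1):
            try:
                handler(record)
                metrics.succeeded += 1
                break
            except Exception as exc:
                logger.warning("attempt %d failed for %r: %s", attempt, record, exc)
                if attempt == max_attempts:
                    # Give up: keep the record for later inspection or replay.
                    metrics.failed += 1
                    metrics.dead_letter.append(record)
                else:
                    metrics.retries += 1
                    time.sleep(base_delay * 2 ** (attempt - 1))
    return metrics
```

Records that exhaust their attempts end up in `dead_letter` rather than stopping the whole flow, so one bad record does not block the rest. Setting `base_delay` above zero spaces out retries, which is usually kinder to a struggling downstream system.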

- Ensure scalability to handle increasing data volumes.

- Implement real-time monitoring for efficient operations.

- Establish strong security protocols to protect data.

- Facilitate seamless integration of diverse data sources.

In summary, a well-deployed data integration architecture should be adaptable, secure, and efficient. By focusing on these operational aspects, organizations can improve data accessibility and reliability, ultimately driving better decision-making and business insights.

Future Trends in Data Integration

As data integration continues to evolve, several trends are shaping its future. One significant trend is the increasing adoption of artificial intelligence and machine learning to automate and optimize integration processes. These technologies can enhance data quality and streamline workflows, making integration more efficient and reliable. Additionally, the rise of cloud-based integration platforms is transforming how organizations manage their data. These platforms offer scalability and flexibility, allowing businesses to integrate diverse data sources seamlessly.

Another emerging trend is the focus on real-time data integration, driven by the need for immediate insights and decision-making. This shift is supported by advanced tools and services like ApiX-Drive, which simplify the integration process by connecting various applications and automating data flows. Furthermore, the growing emphasis on data governance and security is pushing organizations to implement robust frameworks to protect and manage their integrated data. As these trends continue to develop, businesses will need to adapt to maintain a competitive edge in the data-driven landscape.

